Government systems are moving to a COTS and reuse paradigm. The reasons for this are many: budget reduction efforts in government, maturity in COTS systems, convergence on standard hardware/software suites in industry and Government, and economies of scale in large purchase agreements.

It is easy to co-host COTS and targeted reusable software components. Such efforts may meet up to half of a system's requirements, leaving the appealing prospect of implementing only the other half. Unfortunately, the other half of the requirements are usually the hard-to-meet ones--elimination of single points of failure, coordination of activities on disparate nodes, connecting products to support workflow, and even requirements to implement functions which have never been operationally prototyped.

Reusing existing software also brings the problems of initiating similar operations from the same software location, and allowing experimental software to be quickly and usefully integrated for evaluation.

Further consideration must be given to what COTS or reusable components are suitable and what role such products should play in a system.

If a system is being built with the knowledge that external developers are creating components for it, what features and design should the system provide to support this? What evaluation of those added components is necessary and appropriate? What is needed to integrate such components into the system? How can these components present a unified look and feel to the operators?

ASAS faced these challenges and has delivered a system built on a set of concepts to meet them. The ASAS approach appears successful for systems of its sort--command and control workstation-based systems.

This paper spells out some of the details of the ideas driving the ASAS implementation.

ASAS is used by analysts to gather, evaluate, and disseminate information. Analyst activities build on a set of data tables that are shared and updated by both background processes and results of analyst interactive manipulation. Analysts create, manipulate, and distribute map depictions of the current and planned situations, and determine which incoming events and messages require immediate attention, changes to the current situation, or messages sent out to others.

Tools used in these activities include maps, message processors, criteria tools, intelligence fusion tools, imagery viewing tools, web browsers, office products, electronic mail, FTP tools, directory tools, backup and restoration tools, and communications management tools for other protocols used.

A prime focus of ASAS development was to incorporate the products of Army reuse efforts, and to integrate COTS products where these made sense to lower the overall cost of ownership, while providing significant benefits of ownership. A selection of these products and their levels of integration is shown in Table 1.

As a result of this focus, ASAS has applications and interfaces to programs written in C, C++, Ada, FORTRAN, Tcl/Tk, all 3 popular shells, ELF, Applix Builder, Oracle Forms, and AIE.

In the same time period, ASAS reuse and integration efforts have moved from the Army Tactical Command And Control System (ATCCS) Common ATCCS System Software (CASS) reuse efforts to the Defense Information Infrastructure (DII) Common Operating Environment (COE).

Commercial off-the-shelf products are attractive because their costs are spread over a large number of users, leading to lower prices and an established mechanism for improving the product in future releases. In addition, natural market forces drive interoperability standards that these products support. The more sophisticated COTS products have an additional benefit--they provide extension mechanisms for embedding customized functionality into the product. However, the developer must look beyond COTS functional capabilities and consider life cycle questions.

A Very Simple Example

- What are the supported standards?
- What is the viability of this product?
- Is this product likely to support all the platforms that may be needed in the future?
- How could use of this product grow in meeting other requirements?
- Is this product one to build on, or one to insert?
- How does licensing work? What is required to support it?

Running COTS products

The Spectrum Of Products

- Can I communicate with the product from all my development languages?
- What is required to start up the COTS product?
- What is required to shut down the COTS product?
- How do signals affect the product?
- How do failures affect the product?
- What do exit statuses mean for the product?
- What boot-time environment requirements does this product impose?

Distributed Computing Environment

Oracle

ELT3000

Applix Data

- Create a column for Army grid coordinates (MGRS) if the table had latitude and longitude columns
- Support plotting table data to the map if location is available.
- Support generation of common messages from selected rows of data
- Retrieve associated message or messages from selected rows of data if a journal key is available.

Thus, a word processor is easy to embed in a system; but the designers must determine how the larger life cycle questions affect its choice and use. In ASAS, the word processor was required to read and write documents in a variety of formats (those prepared by other systems ASAS interacts with), and have documents be easily mailed. Several word processors are able to meet these requirements. During operational analysis, it also was determined that a small number of document templates would be desirable. Thus, selecting a flexible word processor that had more capability than the minimally required characteristics gave an easy implementation path for a derived requirement identified later.

The word processor selected was part of an office suite, specifically selected because it supported major Unix platforms and was the suite selected for at least one program ASAS interoperates with. Other office suites existed, and each had its proponents; thus, it seemed likely that the office suite would change over the life of the program.

| Product | Insert or Build On | Purpose in System |
|---|---|---|
| Transarc DCE | Build on | Architecture and location independent interprocess communications |
| Oracle RDBMS | Insert | Data storage and management |
| Applix Words, Draw, Spreadsheet, Mail | Insert | Office Suite |
| Applix Data | Build On | Database browsing, customized to meet system requirements |
| ELT 3000 | Build On | NITF Imagery viewing, annotation, and format conversion |
| Netscape Server and Client | Insert | Web-based information sharing paradigm. A few CGI scripts make product of other parts of the system available from the web. |
| HP Extensible SNMP Agent | Insert | Provide status monitoring information on system identity, behavior, performance |
| WABI/Microsoft Windows | Insert | Provide basis for hosting related products |
| Triteal CDE | Build On | Provide common desktop and launch mechanism for all software |

The word processor was deemed to be a product to insert, not build on, so extension of the tool for additional tasks was not considered. Normally, this would have precluded using the word processor for building other capabilities into the system. The subsequent desire to add specialized document templates was permitted since, if necessary, the templates could easily be completely redeveloped on another word processor at an acceptable level of rework.

Licensing of the selected office product suite (Applixware) was available in a per-workstation version, but required a license key tied to the hostid. This was worse than a "branded" version, but much better than some "per-user" licenses. For instance, Microsoft Windows under WABI wouldn't reasonably (legally) support the dynamic addition and deletion of users. Since that time, a "branded" version of Applixware has been made available for Government use.

The preceding paragraphs sketch the thoughts going into ASAS use of COTS products (see Table 1). In today's market, there is no difficulty in finding and using products that substantially meet requirements; the difficulty is in ensuring that the products are useful in the intended modes of operation. A word processor was used as a microcosm of this approach since it is inherently well-understood, yet has some subtleties. Some COTS questions suggested by this analysis are summarized in Figure 1.

COTS products provide challenges beyond simply invoking them. Ensuring one controls when they run, when they stop running, and how they interact with other features and capabilities of the system is paramount.

The runtime environment must be built with "enablers" to support COTS or externally-built products. Enablers allow products to take advantage of system features or services. This environment works against the traditional view that single-language systems are best. If, in fact, COTS and reuse are driving factors in the system, enablers must be provided that will work with every popular language. Under Unix, therefore, enabling functions are available in C (with C++ and FORTRAN using the C interfaces). Bindings or equivalent enabling functions are available in Ada; and binaries (which use command-line arguments to invoke the enabling functions) are callable from the shell (and thus from Common Desktop Environment (CDE) actions as well).

The philosophy for enablers is that they provide sufficient capability to allow a hosted application to have useful interaction with existing applications, and that no use of enablers is required as a precondition to hosting a new application.

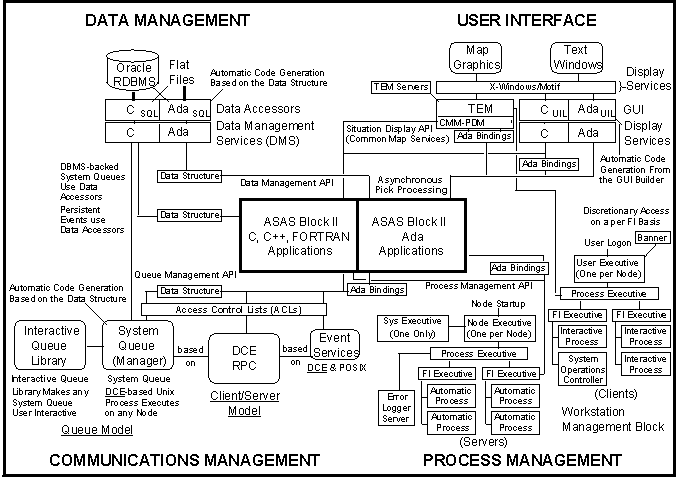

In Table 2, a description of the primary enabling techniques used in ASAS is provided. These techniques can be grouped as follows: process launching and management comprise the first 3 entries in the table, interprocess communications the next two, data management the next, and user interface services the last two.

| Enabler | Description |
|---|---|
| Process Management | Controls how a process is run in the system. Everything from its owner and group, to its priority, restart attempts, and shutdown signal are managed. Also, for more integrated products, provides a way to launch or status a managed process. |
| User Notices | Provides a syslog-based mechanism to collect and distribute diagnostic, security, and user-level notifications and alarms. Uses a human engineering-controlled database of messages to allow post-development tuning of message content, delivery routing, and whether "pop-up" display is warranted. |
| CDE | Provides a common desktop environment for all applications. |
| System Queues | Provides strongly typed prioritized LIFO or FIFO queues for data driven processing. Queues can have backing store, and support both warm and cold starts, based on command line option. Queues are built entirely by code generator from a data element description. |
| Event Services | Provides distributed notifications of occurrences in the system. Services are available for X loop, threaded, and synchronous notifications of events. |
| Database Accessors | Provides a database-neutral (and file-neutral) way of presenting data to a developed application. Allows "papering over" schema differences, and preparing applications for data distribution and replication invisible to any developed code. This capability uses a code generator. |
| Map Services | Allow any application to create and maintain map entities on any user-defined map, register for providing map service, register for notification of manipulation of map entities, and perform map manipulations |
| Common User-interface Dialogs | Support most common user interactions in standard ways. |

Figure 2 depicts these four "quadrants" of enablers that ASAS Block 2 has elected to implement. Each application that ASAS hosts has a different level of integration with the four quadrants; the overall level of integration can be seen to measure the level of "ASAS-enabling" for the application.

Figure 2: ASAS Block 2 Software Process Environment

The process management quadrant is used both for starting, stopping, prioritizing, and performing other management tasks on software in the distributed system and for allowing other processes to start, stop, and monitor processes without entangling themselves in process management policy. C, Ada, and binary services exist for process management.

Two other aspects of process management are CDE invocation (controlling where and how processes are invoked from the desktop), and how processes notify the operator of problems in a prescriptive way.

CDE actions often use an enabler to run the command under process management, which allows priority to be set and allows a set of related processes to start up in a predetermined order. Process management can also be used to limit the maximum number of concurrent executions of a process--if the number is exceeded, the desktop arranges for a currently running invocation to be "popped-up", rather than starting a new process.
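The concurrency limit described above can be sketched as a small launch policy. This is a minimal illustration only: `LaunchPolicy`, `request_launch`, and the instance identifiers are hypothetical names invented here, not ASAS interfaces, and the "pop-up" is represented simply by returning the identifier of an invocation that is already running.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class LaunchPolicy:
    """Hypothetical per-command launch policy (illustrative, not an ASAS API)."""
    max_instances: int
    running: List[str] = field(default_factory=list)  # ids of live invocations

    def request_launch(self, new_id: str) -> Tuple[str, str]:
        """Return ("start", new_id), or ("raise", existing_id) when at the limit."""
        if len(self.running) < self.max_instances:
            self.running.append(new_id)
            return ("start", new_id)
        # Limit reached: bring an existing invocation forward instead of starting one.
        return ("raise", self.running[0])

    def exited(self, instance_id: str) -> None:
        """Record that an invocation has ended, freeing a slot."""
        self.running.remove(instance_id)


policy = LaunchPolicy(max_instances=1)
first = policy.request_launch("viewer-1")   # slot available, so it starts
second = policy.request_launch("viewer-2")  # at the limit, so viewer-1 is raised
```

Keeping this decision in one place means desktop actions do not each need their own rules for how many copies of a tool may run.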

Process notification uses the Unix syslog in ASAS. The messages, their priorities, and their distribution are managed in a data store that can be modified without changing the application. This allows human and operational engineering to "fine tune" messages, priority, and delivery points after some field experience has been gained with the software. Each operator's notice window automatically filters messages for those pertaining to his or her current role. Process notification enablers are available in C, Ada, and binary forms.
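The idea of keeping message wording, priority, and routing outside the application can be sketched as follows. The catalog keys, role names, and field names are assumptions made up for this example; in ASAS the catalog lived in a data store and delivery went through syslog, neither of which is modeled here.

```python
# Hypothetical notice catalog. Applications refer to notices only by key, so
# wording, priority, routing, and pop-up behavior can be tuned without code changes.
NOTICE_CATALOG = {
    "DB_CONN_LOST": {
        "priority": "err",
        "roles": {"system_admin"},
        "popup": True,
        "text": "Database connection lost on {host}",
    },
    "MSG_RECEIVED": {
        "priority": "info",
        "roles": {"analyst", "system_admin"},
        "popup": False,
        "text": "Message {msg_id} received",
    },
}


def build_notice(key, **fields):
    """Look up a notice by key and fill in its text from the catalog entry."""
    entry = NOTICE_CATALOG[key]
    return {
        "key": key,
        "priority": entry["priority"],
        "popup": entry["popup"],
        "roles": entry["roles"],
        "text": entry["text"].format(**fields),
    }


def notices_for_role(notices, role):
    """Keep only the notices routed to the operator's current role."""
    return [n for n in notices if role in n["roles"]]


log = [build_notice("DB_CONN_LOST", host="ws3"), build_notice("MSG_RECEIVED", msg_id=17)]
analyst_view = notices_for_role(log, "analyst")
```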

The second quadrant of enablers is for inter-process communications. Traditionally, the ASAS work paradigm is one of a data driven module which awaits pending work, processes it, and queues it for the next such module. The system queue provides the required intermediary. System queues are strongly typed and entirely generated by an automated tool. C and Ada implementations exist for queue functions; some queues also have shell-script enablers. Distributed events provide notifications that something of interest to the application has occurred. C, Ada, and binary services exist for distributed events.
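A strongly typed, prioritized queue of the kind described above might look like the sketch below. It is an in-memory illustration under stated assumptions: `TrackReport` is an invented element type, the queue is hand-written rather than generated, and backing store and warm or cold starts are not modeled.

```python
import heapq
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class TrackReport:
    """Hypothetical queue element type, invented for illustration."""
    track_id: int
    summary: str


class TypedPriorityQueue:
    """Prioritized queue for one element type; FIFO or LIFO within a priority."""

    def __init__(self, element_type, lifo=False):
        self._type = element_type
        self._lifo = lifo
        self._heap = []
        self._counter = itertools.count()

    def put(self, item, priority=0):
        # Reject anything that is not of the declared element type.
        if not isinstance(item, self._type):
            raise TypeError(f"expected {self._type.__name__}, got {type(item).__name__}")
        seq = next(self._counter)
        # A lower priority number is served first; the sequence number breaks ties,
        # negated for LIFO so the newest entry at a given priority comes out first.
        order = -seq if self._lifo else seq
        heapq.heappush(self._heap, (priority, order, item))

    def get(self):
        """Remove and return the next item."""
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)
```

A worker module would loop on `get()`, process the item, and `put()` the result on the queue feeding the next module.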

The third quadrant of enablers is the data management scheme, which hides the complexity and the low-level implementation of data access from the program. Accessors are generated by automated tools, and both C and Ada interfaces are provided.

The fourth quadrant of enablers represents text and mapping services. Wrapping languages such as Tcl/Tk, interaction with a pseudo-terminal (pty) program, and use of the UIM/X interface builder are used to enable existing programs to have GUIs. Map services also fall into this quadrant, and these services were written specifically to allow any ASAS-hosted application to be able to put information on an analyst-selected map without becoming a map-owning application.

Of the enablers described, the process management portion has proven the most critical. This provides both the means for COTS processes to be controlled and managed properly, and also enables COTS products to launch ASAS processes under full management and control.

Tivoli Enterprise Management System, DCE, and CORBA address portions of the process management enabling services described here, and Transarc's Encina has similarities to the system queues presented for interprocess communications. These products hold out the promise that in the not-too-distant future, many of these enabling techniques will be available and integratable from COTS, with less "glueware" required.

Table 1 summarizes a representative sample of the COTS products used in the ASAS system, their purpose, and the elected level of integration.

Supported standards for COTS are important not only for the ones recognized in the System/Segment Specification (SSS), but also for emerging standards and industry trends, as illustrated in Figure 1. Has the vendor focused on those standards, or is it content to establish its own standards? In the latter case, the product is clearly one to install, not to build on.

It is a fact of life that software vendors come and go--and only the most successful products continue. Over the life of the program many versions of the COTS will doubtless be installed--but it is better from a user training standpoint to limit the number of products per se.

Programs like the Defense Information Infrastructure Common Operating Environment (DII COE) show that migrating a DOD-developed product between platforms may become the norm, rather than the exception. Fortunately, the X/Open group and others have standardized the APIs in Unix systems sufficiently to minimize the cost of porting between most systems, so COTS products tend to be available on all the major platforms.

Each selected COTS product can provide its function in a standalone environment, or can be integrated with other applications. Some COTS applications, like DCE, can pervade an entire system, while others, like Applix Data (described in section 3.3.4) can provide a supporting layer for a particular set of applications. The decision to integrate COTS into the system can make it expensive to remove or replace the product, and should not be made lightly. Figure 3 summarizes some of the questions that help in selection of COTS as a supporting product (one to insert) or as an enabler (a product to build on).

ASAS Block 2 supports a distributed system. A number of servers are running on various workstations in the system, and a workstation-independent way of communicating with the servers was needed. Additionally, some kind of security mechanism both for ensuring integrity of the data and for accepting or denying connections was required. Finally, since the hardware platform for the final delivery was not yet fixed, and ASAS legacy systems included PC, Sun, HP, and DEC equipment, a hardware architecture-independent protocol was indicated.

Among the candidates, DCE stood out as the most versatile product. The product was championed by the OSF, and its RPC mechanism was based on Apollo's Network Computing System (NCS).

ASAS Block 2 adopted DCE as a product to build on. As an architectural policy, all new distributed client/server communications were expected to be provided by the DCE RPC mechanism. In general, this has led to robust, workstation-independent communications between ASAS clients and servers.

ASAS use of DCE caused some unforeseen problems when the ATCCS community adopted DCE as the communications transport among battlefield functional areas (BFA) in the Task Force XXI (TFXXI) advanced warfighter experiment (AWE). The ASAS DCE Cell Directory Service (CDS) standards were different from the developing ATCCS standards, and worse, multiple RWS-C enclaves were forced into a single DCE cell, something they weren't designed for. In addition, the approach chosen for DCE use in ATCCS often wiped out cell information entirely and rebuilt it from scratch, losing DCE principals and other authentication information.

The focus on API-based services proved beneficial in adapting to this unfortunate situation; all DCE services registered with a common routine, and all connections were done with a similar routine. Changes in these routines were the major portion of the rework required to sidestep this violation of architectural assumptions.

When ASAS Block 2 was awarded, the United States Army was still in the procurement cycle for Common Hardware/Software 2 (CHS-2), a bulk purchase of Army computer needs. The hardware and software for ASAS were literally unknown.

Thus, ASAS had to be prepared to port its software to a different platform, different COTS products, and different compilers within 18 months after contract award.

Yet, the processing heart of ASAS is its DBMS; any progress had to be measured in terms of database transactions for the background processing (and maps for the user).

Thus, ASAS selected a strategy that allowed the database to be hidden from the applications while preserving performance. This strategy was called "database accessors." No new application was permitted to place SQL statements in its code; instead, an interface would be agreed to between the application and a special database group. The application programmer could program to the accessor immediately after the interface was agreed to, while the database group had an opportunity to understand the DBMS requirements of the system, designing the system with appropriate normalization and indices and eliminating unnecessary redundancy.

The accessor technique was refined from one developed for independent research and development (IR&D) work and applied on the Granite Sentry Air Force program. The same techniques were refined again and coupled with automated generation tools and used on the ASAS program.

The accessor technique was also required for file access, since the interface from the application programmer's perspective was the same, and migration from local file access to database access became a standard technique in preparing legacy applications for distribution.
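The accessor idea, in which application code programs to an agreed interface and never sees SQL or file formats, can be sketched as below. This is an assumption-laden illustration: the `UnitAccessor` interface, the `unit` table, and the JSON file layout are all invented for the example, SQLite stands in for the project DBMS, and the accessors are hand-written here whereas the paper's were produced by a code generator.

```python
import json
import os
import sqlite3


class UnitAccessor:
    """Hypothetical agreed interface: applications call these methods, never SQL."""

    def add_unit(self, name, lat, lon):
        raise NotImplementedError

    def find_unit(self, name):
        raise NotImplementedError


class SqlUnitAccessor(UnitAccessor):
    """Database-backed implementation; the schema is private to the accessor."""

    def __init__(self, conn):
        self._conn = conn
        conn.execute(
            "CREATE TABLE IF NOT EXISTS unit (name TEXT PRIMARY KEY, lat REAL, lon REAL)"
        )

    def add_unit(self, name, lat, lon):
        self._conn.execute("INSERT OR REPLACE INTO unit VALUES (?, ?, ?)", (name, lat, lon))

    def find_unit(self, name):
        row = self._conn.execute(
            "SELECT lat, lon FROM unit WHERE name = ?", (name,)
        ).fetchone()
        return None if row is None else {"name": name, "lat": row[0], "lon": row[1]}


class FileUnitAccessor(UnitAccessor):
    """File-backed implementation exposing exactly the same interface."""

    def __init__(self, path):
        self._path = path

    def _load(self):
        if not os.path.exists(self._path):
            return {}
        with open(self._path) as fh:
            return json.load(fh)

    def add_unit(self, name, lat, lon):
        data = self._load()
        data[name] = [lat, lon]
        with open(self._path, "w") as fh:
            json.dump(data, fh)

    def find_unit(self, name):
        entry = self._load().get(name)
        return None if entry is None else {"name": name, "lat": entry[0], "lon": entry[1]}


def describe_unit(accessor, name):
    """Application code: written once against the interface, unaware of the backend."""
    unit = accessor.find_unit(name)
    if unit is None:
        return "unknown"
    return f"{unit['name']} at {unit['lat']:.2f}, {unit['lon']:.2f}"
```

Because `describe_unit` only sees the interface, moving a legacy application from local files to the database amounts to constructing a different accessor.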

The underlying support layer was structured for migration to the objective system's requirement to support replication and failover.

One of the tasks of ASAS is dissemination of intelligence data in an image format. This can be annotated national imagery, annotated situation maps, UAV stills or clips, and similar image types. The exchange standard format for these images is NITF (which supports location registration information), though some alternates are supported.

Important features of the imagery viewer were similarity to products used in systems ASAS users may encounter, ability to launch product from another program or from the Netscape browser, ability to limit the number of copies launched, and ability to support the various formats that ASAS users required.

Since the product was to remain compliant with products used for other programs, this product was not considered a product to build upon, but rather one to simply insert. Other than resource file customization, no ASAS integration was performed.

ASAS used ELT3000's Tooltalk API to launch at most one copy of the image viewer, and to populate its thumbnail collection with the various images sent to it. The product is used to view and annotate maps treated as images.

If ASAS is to the user a map-centric system, to its internal processing it is a database-centric system. From message processing to analysis to message generation, the intermediate results are stored and manipulated in the database.

Therefore every analytical table in the database must be queryable (within role-based privileges) and many of the actions performed from the map must be capable of being performed on the results of a data query. An additional requirement in this area is that an expert ASAS user must have the ability to edit the data in any analytic table to correct the stored value.

The choices for implementation were to carry the ASAS Block I approach forward, or to base an implementation on available COTS. The ASAS Block I approach was to create a set of general services and a set of unique forms, one for each set of tables queried. A COTS-based approach would give a general set of capabilities for all tables, and add unique forms for common tasks. ASAS chose the latter course. Therefore, the analysts started with the ability to query or update any table in the database (if the user was in a group with privilege to do so), along with all the COTS capabilities to select and manipulate columns, sort and filter data, lock rows for update, and print results.

The Applix Data product provided an extensible data query tool that could attach to any of the databases considered in the hardware procurement for the US Army (CHS-2). By providing a background process and using available Applix macros, all ASAS customizations could be realized.

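The COTS product was extended to create a column for Army grid coordinates (MGRS) when a table has latitude and longitude columns, plot table data to the map when a location is available, generate common messages from selected rows of data, and retrieve the associated message or messages for selected rows when a journal key is available.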

The Applix Data product lies somewhere between products that are run with integration enhanced by enablers and products that are themselves enablers. As an enabled product, it takes advantage of system queues, event services, and process management to perform its tasks; as an enabler, it permits any added database schema to have more general functionality in the system without additional programming.

As one would expect, hosting tools built by external developers has many of the same issues as COTS tools. However, since these tools are likely very mission specific, and likely must interact with other applications, these tools may add requirements to existing services, may require unification of data schemes with the data on the system, and may be expected to have similar menus, options, and behavior to other functions in the system.

For each of the "enabler" areas a strategy is determined to make the application be as well-integrated as desired. Table 3 summarizes some of the external developer software used in ASAS, and the techniques used to integrate it.

| Application | Description | Integration Technique |
|---|---|---|
| Core Analyst Tool Set (CATS) | C++ applications to aid in identification and categorization of radars and radios. SSPS program product | Partial process management, user interface, and data schema integration in first phase; database accessor and full map integration in third phase |
| IREMBASS | C application to manage a legacy sensor. Army BCBL product | Insertion integration only |
| Data Fusion Server (DFS) | C and Oracle Form application to support doctrinal and situational templates. JPL product | Change from map owner to map client. Otherwise, insertion integration only |
| Terrain Evaluation Module (TEM) | C and FORTRAN map product. Legacy reusable Army mapping product. MITRE/WES product | Fully integrate. Wrap mapping product into a server, and have all mapping users link to the server library. Server library emulates relevant TEM API precisely, and extends. |
| Sensor Placement Analyzer (SPA) | C++ applications evaluate and plan sensor placement. University of Virginia product | Integrate into work flow. Link with emulation map library to display/work on common map. Migrate to get data from common GIS |
| QUIPS | C application to aid in scheduling assets for collection. JPL product | Integrate into work flow. Link with emulation map library to display/work on common map. Provide data management service to track asset status against automatic message update |
| Task Force XXI client/server products | Sets of applications in C, C++, Ada, and shell scripts to support the TFXXI advanced warfighter experiment. Various Army and contractor organizations | Arrange process-managed invocation. Rapidly integrate due to frequent updates and short turnaround times. |

Three of the products chosen for use in ASAS Block 2 exemplify different working relationships and incorporation strategies.

TEM case study

Benefits of the map server:

- It allowed associate contractors to build products against the map independently;
- It limited coding impact of changes in map code to two CSCs (the map block GFS was managed in a different CSC than the DCE RPC server), and generally fixes did not require recompilation of map users' code;
- It allowed creation of compatibility APIs to other map blocks, such as PDM; and
- It will allow migration to JMTK, which has been selected as the Joint Services map interface. The map server product is referred to as the common map manager (CMM).

TEM integration strategy:

- Establish working relationship with developer and maintainer
- Establish source code baseline for debug and increasing effort at fixing erroneous code
- Keep database dependencies from the map server
- Make use of product as DCE RPC server to isolate changes and enable other products to integrate with maps
- Keep RPC server as migration path to JMTK

CATS case study

- Create map compatibility library to minimize development baseline breakage
- Create DBMS compatibility layer to minimize development baseline breakage
- Add logic to find automatic (ONC RPC) processes to enable CATS to become a multi-node product
- Migrate error and status messages from display on standard output to ASAS error logging

SPA case study

- Use map compatibility library to minimize changes to software baseline
- Migrate product to use existing GIS to enable standard DMA products to support all map-related tools.
- Add bitmap-overlay capability to map server to provide SPA-required service
- Migrate standard output error notification to log-based notifications

The Terrain Evaluation Module (TEM) is a product originally developed by the Waterways Experiment Station (WES) of the Army Corps of Engineers. MITRE determined that this product was the best available and would form the basis of Army mapping systems. Thus TEM was made a part of the Common ATCCS System Software (CASS), and formed a required basis for the ASAS Block 2 efforts.

Since, at that time, TEM was still an immature product, ASAS set up a working relationship with both MITRE, who was at that time maintaining the product, and WES, who was still providing the terrain and GIS features of the product. The ASAS system centers around the map from the analyst's perspective, so map stability was an absolute must.

This relationship included source code with the understanding that all required changes would flow back to MITRE for incorporation into later releases of the product.

This relationship resulted in a much more stable TEM product for all Army users, and a product that ASAS could rely on for building the system.

TEM had a number of architectural flaws that made it more difficult to build the ASAS system than necessary. Foremost, it required each using application to manage a map. This was unacceptable to ASAS, since many different applications had to be able to render product onto a single map, and multiple maps had to be supported.

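This led ASAS to integrate TEM by creating a DCE RPC server which accepted commands (preserving the TEM API) from any application and rendered the output onto any of a set of maps managed by that server. This effectively divided the map product producers from the map management task, and had several other benefits as well: associate contractors could build products against the map independently; the coding impact of map changes was limited to two CSCs, generally without recompiling map users' code; compatibility APIs to other map blocks, such as PDM, could be created; and a migration path to JMTK was kept open.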

Figure 4 summarizes the TEM integration strategy.
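The separation between map ownership and map use can be illustrated with a toy, in-process stand-in for the map server. Everything here is hypothetical: `CommonMapManager` and its methods are invented names, there is no RPC layer, and the TEM API that the real server preserved is not reproduced.

```python
class CommonMapManager:
    """Toy stand-in for a map server: it owns the maps; clients only send commands."""

    def __init__(self):
        self._maps = {}       # map name -> {entity id -> entity attributes}
        self._listeners = []  # callbacks notified when a map entity changes

    def create_map(self, map_name):
        """Create the named map if it does not already exist."""
        self._maps.setdefault(map_name, {})

    def register_listener(self, callback):
        """Register interest in manipulations of map entities."""
        self._listeners.append(callback)

    def plot(self, map_name, entity_id, lat, lon, owner):
        """Place or move an entity on a map on behalf of any client application."""
        self._maps[map_name][entity_id] = {"lat": lat, "lon": lon, "owner": owner}
        for notify in self._listeners:
            notify("plot", map_name, entity_id)

    def entities(self, map_name):
        """Return a copy of the entities currently on the named map."""
        return dict(self._maps[map_name])


events = []
cmm = CommonMapManager()
cmm.create_map("situation")
cmm.register_listener(lambda action, map_name, entity_id: events.append((action, entity_id)))
# Two unrelated applications render onto the same map without owning it.
cmm.plot("situation", "radar-7", 38.9, -77.0, owner="cats")
cmm.plot("situation", "sensor-2", 39.1, -76.8, owner="spa")
```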

TEM itself was enabled through the use of the process management services. TEM's six servers initially had a tendency to exit abnormally; use of process management allowed such failures to be recovered almost immediately, with no action required by the user.
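Automatic recovery of a failing server can be sketched with a simple restart loop. This is a minimal illustration, not the ASAS process manager: `run_with_restarts` is a hypothetical helper, it runs the command in the foreground, and it treats any nonzero exit status as abnormal.

```python
import subprocess
import sys


def run_with_restarts(argv, max_restarts):
    """Run argv; after an abnormal exit, restart it up to max_restarts times.

    Returns the list of exit statuses observed, one per attempt.
    """
    statuses = []
    for _attempt in range(max_restarts + 1):
        status = subprocess.run(argv).returncode
        statuses.append(status)
        if status == 0:
            break  # clean exit: nothing to recover
    return statuses


# A stand-in "server" that always exits abnormally with status 3.
failing_server = [sys.executable, "-c", "import sys; sys.exit(3)"]
observed = run_with_restarts(failing_server, max_restarts=2)
```

A restart limit of this kind keeps a persistently broken server from being relaunched forever.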

Thus, the TEM product migrated from a product using enabling services to incorporation into a product that actually provided them.

The CATS product is a C++ product used in the Single Source Processor SIGINT (SSPS). It has components for working with radio and radar reports, for browsing reference databases and for analyzing results both textually and on the map.

CATS is a layered, structured product. As such, the challenges in integration were in keeping the SSPS baseline and the ASAS baseline as similar as possible, while working with different database systems, different mapping systems, and different process management policies. This was successfully accomplished through the use of #ifdef blocks in the code, and compatibility layers built over the ASAS code. Building a compatibility layer for the SSPS mapping system was seen as a strategic asset since SSPS and a number of other products could more easily port to ASAS if it had an emulator for the most popular of the PDM mapping calls. The DBMS objects invoked the ASAS DBMS layer to support all server lookup and failover functions without changing the SSPS implementation.
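A compatibility layer of the kind described above lets ported code keep its original calls while the host system's services do the work. The sketch below is purely illustrative: both the "native" map client and the "legacy" call signature are invented for the example and do not reproduce any real PDM or ASAS interface.

```python
class NativeMapClient:
    """Hypothetical host-system map interface (a stand-in, not an ASAS API)."""

    def __init__(self):
        self.drawn = []

    def plot_symbol(self, map_name, lat, lon, label):
        self.drawn.append((map_name, lat, lon, label))


class LegacyMapCompat:
    """Emulates a hypothetical legacy call on top of the native client."""

    def __init__(self, client, default_map):
        self._client = client
        self._map = default_map

    def draw_point(self, x_lon, y_lat, text=""):
        # The assumed legacy call takes (lon, lat) with an implicit map; the native
        # call takes an explicit map name and (lat, lon), so the layer translates.
        self._client.plot_symbol(self._map, y_lat, x_lon, text)


client = NativeMapClient()
compat = LegacyMapCompat(client, default_map="situation")
compat.draw_point(10.0, 50.0, "radar")  # ported code keeps its original call
```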

The SSPS product was also written as a standalone application; use of the ASAS process management enabler allowed the processing to be distributed across several nodes, and several analysts are able to share the data produced.

To preserve the common baseline, ASAS did not consider converting the ONC RPC communications of any existing functionality.

An interesting performance discovery we made was that background process logging was redirected to the console. Since this console is available to the user through a read-only window, every output message was rendered by X on the window, which can lead to a substantial, measurable performance impact. Process management was used to "ground" this output, and performance immediately increased.
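"Grounding" a process's output amounts to launching it with its output streams discarded. A minimal sketch follows; `run_grounded` is a hypothetical helper name, and the chatty child process is a stand-in for a background application.

```python
import subprocess
import sys


def run_grounded(argv):
    """Run argv with stdout and stderr discarded; return its exit status."""
    result = subprocess.run(
        argv,
        stdout=subprocess.DEVNULL,  # nothing reaches the console window
        stderr=subprocess.DEVNULL,
    )
    return result.returncode


# A chatty stand-in process: its output is dropped instead of being rendered.
chatty = [sys.executable, "-c", "print('status line ' * 1000)"]
status = run_grounded(chatty)
```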

Figure 5 summarizes the CATS integration strategy.

The sensor placement algorithm was developed at the University of Virginia (UVA) and produced by Commonwealth Computer Research (CCR) to select optimal sensor placement locations and to evaluate and show coverage on a map.

SPA is a C++ application built to stand alone. SPA displayed on its own map, used its own pre-processed digitized terrain elevation data, and prepared its product visually with no other interaction available. The challenge in ASAS was not only to successfully host the application, but to fit it into the asset manager's workflow in a useful way.

SPA coverage overlays were only of use if they could be displayed over a common map; since these were calculated as bitmaps, an interface had to be provided to allow a georegistered bitmap to be placed over the common map.

We chose the integration strategy outlined in Figure 6. We provided a georegistered bitmap call in the CMM to enable SPA to draw on the common map. Over several releases, we converted SPA DTED access to use the same GIS as other ASAS applications; and we began identifying and calling out the specific products of the SPA process that would need to interact with other applications in the asset management discipline.
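Placing a georegistered bitmap over a map comes down to mapping pixel indices to geographic coordinates. The sketch below assumes a north-up bitmap whose edges coincide with a latitude/longitude bounding box and whose pixels are evenly spaced; the function names are invented for illustration and do not describe the actual CMM call.

```python
def pixel_to_latlon(row, col, rows, cols, north, south, west, east):
    """Return (lat, lon) of the centre of pixel (row, col).

    Assumes row 0 is the northern edge and column 0 is the western edge.
    """
    lat = north - (row + 0.5) * (north - south) / rows
    lon = west + (col + 0.5) * (east - west) / cols
    return lat, lon


def covered_cells(bitmap, north, south, west, east):
    """List the (lat, lon) centres of all set pixels in a 0/1 coverage bitmap."""
    rows, cols = len(bitmap), len(bitmap[0])
    return [
        pixel_to_latlon(r, c, rows, cols, north, south, west, east)
        for r in range(rows)
        for c in range(cols)
        if bitmap[r][c]
    ]


# A 2x2 coverage bitmap registered to a 2-degree by 2-degree box.
coverage = [[1, 0],
            [0, 1]]
cells = covered_cells(coverage, north=40.0, south=38.0, west=-78.0, east=-76.0)
```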

The process of integrating a product successfully into the task flow may take several iterations, as we clearly learned in the case of SPA. The collection manager's job of managing requirements, tracking assets, allocating tasks to assets, determining target locations for assets, scheduling the assets for their tasks, and synchronizing the tasks and the requirements needs particular data and control flow that is not easy to achieve. In the first iteration, the tools were kept standalone, and we assumed the operator would be trained to use them properly and in the correct order.

Experience in the hands of the users indicated the fallacies in this approach, and a more doctrinally-induced flow between the tools supporting these tasks was implemented.

This experience also had positive and negative features, and it seems likely a third iteration will be attempted.

From an infrastructure standpoint this is ideal--the tools for supporting proper interaction are present; the tools must be configured several times to make the interactions most useful.

COTS, GFS, and other reuse software can dramatically reduce the cost and schedule to implement useful capabilities in a software system. However, care must be taken in selection from among a set of products, and balance must be achieved between the capabilities of the product, the needs of the system, and level of desired integration.

Selection of appropriate enabling techniques allows COTS, GFS, and other reuse software to be quickly and usefully integrated in the system, with directed efforts substantially increasing capability.